I’ve recently completed a research report for the Digital Futures for Children centre at LSE. It reveals evidence that regulation focused on children’s privacy and safety is starting to have a substantive impact, most importantly through changes that design in protections by default, rather than addressing safety after the event.

(This content was also published as a blog for Media@LSE.)

As concerns have grown about the risks to children in the digital environment, legislators and regulators have responded with new laws and regulatory codes. In the UK this has led to the introduction of the Age-Appropriate Design Code (AADC) in 2021 and the Online Safety Act 2023 (OSA), while the EU responded with the Digital Services Act (DSA), passed in 2022. All these regimes place responsibility on companies to assess risks to children and take steps to address them in the design and governance of their online services.

The public now rightly expect these new regimes to deliver tangible and visible benefits to children’s experiences online, and a reduction in the harms they are exposed to. But how do we assess the impact of these regulatory regimes in practice? Our research project sought to address the current lack of evidence available to answer this vital question. The research reveals a significant impact of legislation and regulation, while recognising that there is no room for complacency, and there is much work still to be done by companies.

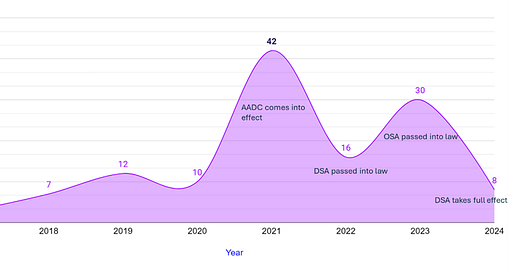

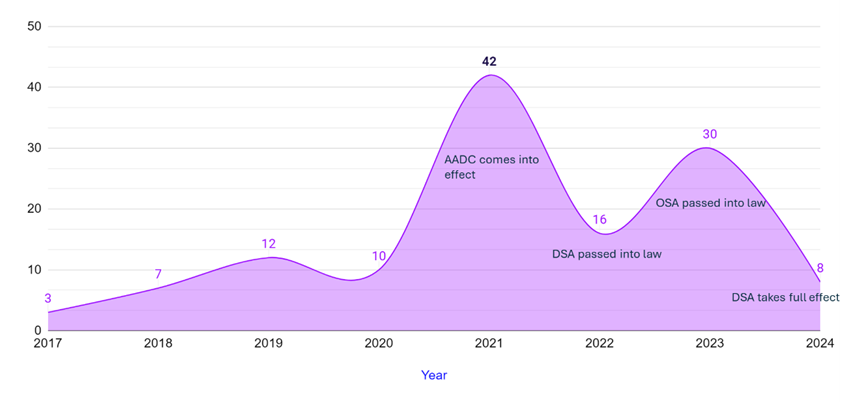

At the centre of our research was an analysis of 128 child safety and privacy changes announced by Meta, Google, TikTok and Snap from 2017 to 2024. The figures in the graph below clearly illustrate a sharp increase in changes as the AADC came into effect – peaking at 42 in 2021 – and a further rise as the OSA and DSA were passed into law.

Child safety and privacy changes announced by Meta, Google, TikTok and Snap from 2017 to 2024

“By default” design changes can make a difference

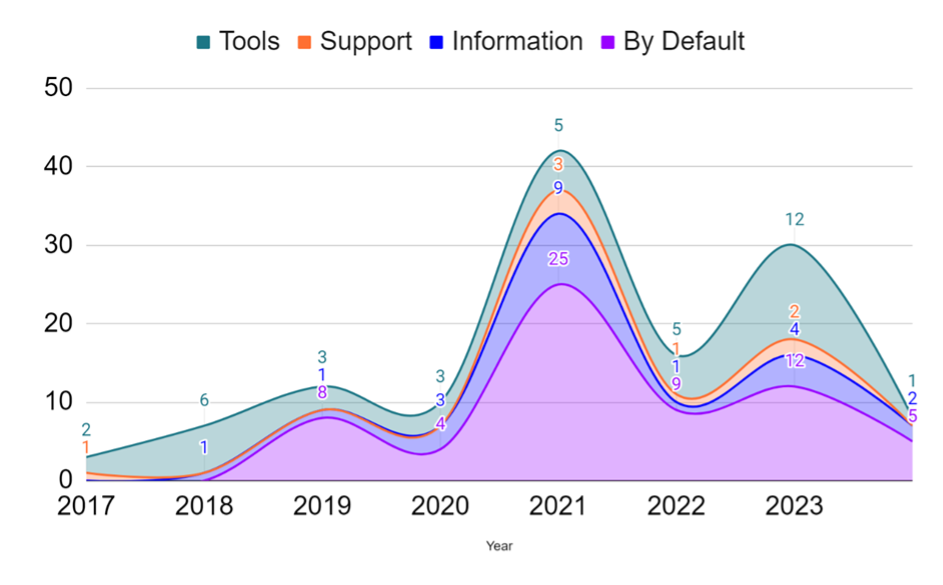

Our research then categorised the changes announced under four headings: ‘by default’, ‘tools’, ‘information’ and ‘support’, based around the requirements of regulation. The data (in the graph below) reveals a spike of ‘by default’ changes in 2021, with a significant volume of these changes continuing after 2021. ‘By default’ requirements are central to the AADC, DSA and OSA. These are changes made to the design of the service that provide default protections through settings or new permanent design features, including user accounts private by default, geolocation off by default and targeted advertising switched off for children.

The ‘by default’ changes we recorded can make a substantial difference to children online. They include all four companies setting social media accounts to private by default, settings to de-prioritise eating disorder content in recommended feeds and safeguards to prevent unsafe content appearing in generative AI outputs.

There were also significant new default settings related to advertising to children. The DSA has the most explicit requirements related to advertising as it prohibits children receiving adverts based on profiling, if the platforms have a reasonable degree of certainty the user is a child. All four companies announced changes to comply with this provision in the DSA, in advance of it coming into force.

Child safety and privacy changes announced by Meta, Google, TikTok and Snap from 2017 to 2024, categorised

Default settings are important as they provide a safer space for children to connect, explore and learn online without the immediate pressures of widespread or unwanted contacts or exposure to certain forms of content. Further research is now needed, focused on children’s real experience online, to see how effective these changes have proved in practice.

Online platforms are over-promoting tools such as parental controls as the solution

The research also found that companies are introducing new tools to protect children’s safety and privacy. Examples include tools that enable children to filter out comments or select a non-personalised recommended content feed, and tools that allow parents to set controls. The promotion of these tools rose in 2023, as implementation of the DSA and OSA grew closer.

While the AADC, DSA and OSA all recognise the role that tools can play as part of an overall approach to safety and privacy, there is a risk that parental controls are being disproportionately promoted as an effective safeguard. Parental controls may be viewed as an attempt to transfer responsibility to parents regarding the child’s use and potential exposure to risks.

Other research conducted by academics at LSE has found evidence of parental controls having beneficial outcomes, adverse outcomes, limiting other outcomes or simply having no outcomes. In evidence to the US Senate Committee on the Judiciary in early 2024, Snap revealed that only 400,000 children use parental controls out of 60 million users under 18. This indicates a usage rate of 0.67% and highlights the risk of relying too heavily on tools that are not regularly used by most children and parents.
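For readers who want to check the arithmetic behind the usage rate, here is a minimal sketch. The two figures are the ones quoted above from Snap's evidence; the variable names are ours, chosen only for illustration.

```python
# Figures as quoted in the text from Snap's evidence to the US Senate Committee.
users_under_18 = 60_000_000
children_using_parental_controls = 400_000

# Share of under-18 users with parental controls in use, as a percentage.
usage_rate_percent = round(
    children_using_parental_controls / users_under_18 * 100, 2
)

print(f"Parental controls usage rate: {usage_rate_percent}%")
```

Put another way, this is roughly one in every 150 users under 18.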

Transparency gap

Research for this project proved challenging – we wrote to 50 companies and only 8 responded, and with limited information. We then relied on sourcing information from online searches, blogs and press releases about changes companies had announced. This process highlighted a lack of consistency in how information was presented and what was included in each announcement, including why the change was made and which jurisdictions it applied to.

Our report therefore contains several recommendations for companies, governments and regulators to improve record keeping and transparency about changes that are made. We recommend that Ofcom and the European Commission create a public ‘child online safety tracking database’, and that companies consistently publish information about changes in a portal that provides data via an API and in a machine-readable format. We also highlight the need for the OSA to contain a statutory requirement for accredited researchers to access data, as in the DSA’s Article 40.
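To give a sense of what a machine-readable record of a change might look like, here is a rough sketch. It is an illustration only, not a format proposed in the report: the `ChangeRecord` class, its field names and the example values (including the company "ExampleCo") are all hypothetical. The fields simply mirror the details we found were often missing from announcements (why a change was made and where it applies), plus the four categories used in our analysis.

```python
import json
from dataclasses import dataclass, asdict, field

# The four headings used in the research to categorise changes.
CATEGORIES = {"by default", "tools", "information", "support"}


@dataclass
class ChangeRecord:
    """Hypothetical record of one announced child safety or privacy change."""
    company: str
    announced: str          # date of announcement, ISO 8601 (YYYY-MM-DD)
    category: str           # one of CATEGORIES
    summary: str            # what changed
    reason: str             # why the change was made
    jurisdictions: list[str] = field(default_factory=list)  # where it applies

    def __post_init__(self):
        # Reject categories outside the four headings so records stay comparable.
        if self.category not in CATEGORIES:
            raise ValueError(f"Unknown category: {self.category!r}")


def to_json(record: ChangeRecord) -> str:
    """Serialise a record to JSON, the kind of output an API could return."""
    return json.dumps(asdict(record), indent=2, sort_keys=True)


# Invented example values, for illustration only.
example = ChangeRecord(
    company="ExampleCo",
    announced="2024-01-01",
    category="by default",
    summary="Accounts for under-18s set to private by default",
    reason="Placeholder: reason stated by the company",
    jurisdictions=["UK", "EU"],
)

print(to_json(example))
```

Publishing records in a consistent shape like this would let researchers and regulators count and compare changes across companies and years without relying on manual searches of blogs and press releases.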

Where next?

While our study has shown that legislation and regulation are starting to make a substantive impact, no one should be complacent about the steps companies still need to take to provide a safe and inclusive environment for children online.

Regulators should continue to evaluate evidence about how effectively solutions work in practice. They should also feed their evidence into new and updated guidance as they learn more about effectiveness and where the bar should be set in expected practice. Our research also highlights the value of a design-driven approach and building protections by default.

Digital Futures for Children are now planning another research study to return to these questions and will publish a new report in 2026. We hope that further advancements in transparency will be made during this period, including the publication of risk assessments under the DSA and OSA. This research will also consider the impact of recently announced investigations by the European Commission into Meta and TikTok, and Ofcom’s codes of practice (published for consultation in May 2024).

Steve Wood is Director and Founder of PrivacyX Consulting, set up in 2022 to provide advice and research services focused on policy issues related to data protection and emerging areas of digital regulation. Steve is also a Visiting Policy Fellow at the Oxford Internet Institute, where he has been conducting research into the impact of social media recommender systems on children.

Previously, Steve worked at the UK data protection regulator, the Information Commissioner’s Office (ICO), for 15 years. He was Deputy Information Commissioner, responsible for policy, between 2016 and 2022. During this time, he oversaw the development and implementation of the AADC.