PrivacyX substack #3: Generative AI and data protection - the story of 2023

Mapping the issues and applying solutions (and a look ahead to 2024)

Well, a little later than planned, here is the next issue of the newsletter. October and November have been busy months for PrivacyX, as I finish off some projects and start work on new ones for 2024.

If you are interested in working with PrivacyX in 2024, the first half of the year is now fully booked, but I should have some capacity later in the year. Drop me a line via LinkedIn if you’d like to chat.

As ever, if you like the newsletter, please share and spread the word.

In this month’s newsletter, I’ve reprised some of the content I’ve used at conference presentations on data protection (DP) and generative AI over the last few months. It also hopefully works as a good summary of the distance we have travelled in 2023 in seeking to understand how DP applies to generative AI.

I should add that these slides and notes don’t seek to capture every issue comprehensively and I will need to continue to refine and update the graphics.

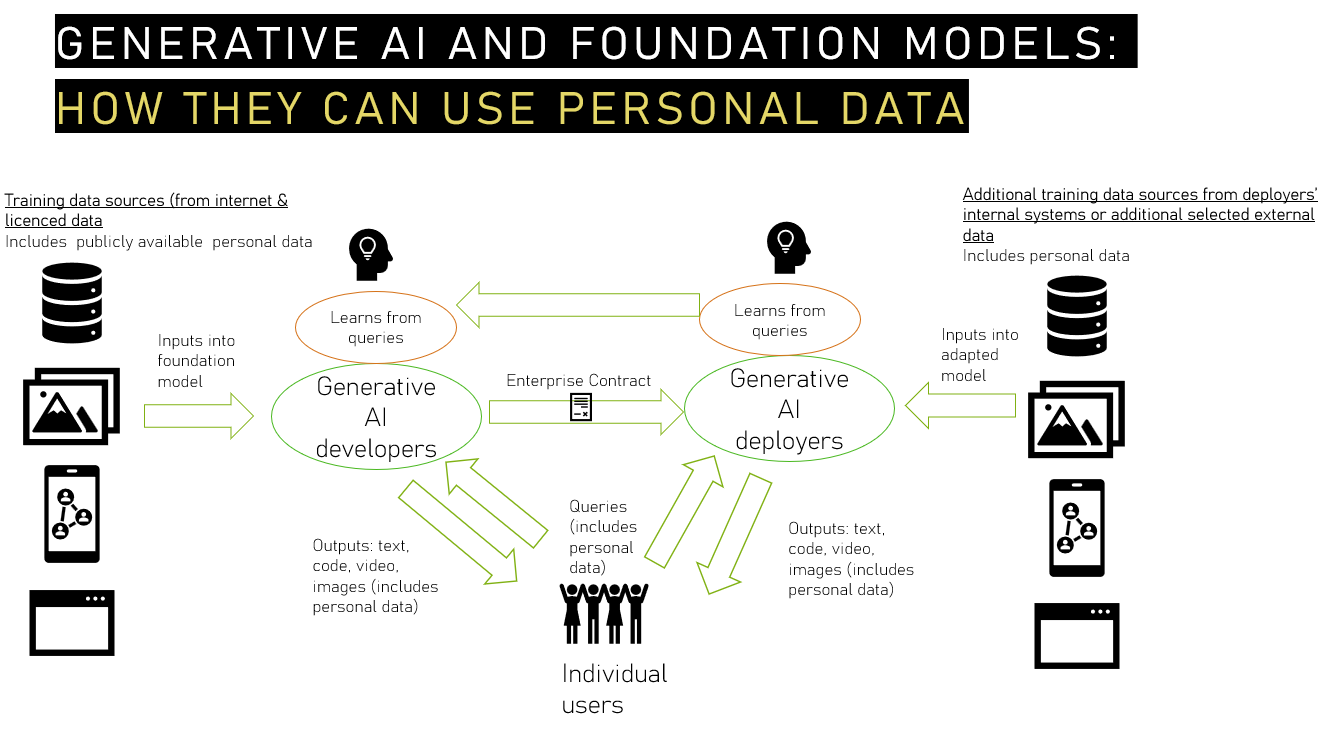

Personal data and the generative AI ecosystem

The first slide seeks to explain personal data flows in and out of the generative AI ecosystem and to highlight the complexity introduced when controllers deploy foundation models from generative AI developers. At each key point, DP risks must be assessed and mitigated.

In the second half of 2023, much of the focus switched to regulating AI safety and existential risks (see the UK AI Safety Summit), but we can’t lose sight of the role that data protection rights and principles, data governance, data protection by design and privacy engineering play as key foundations of AI safety.

A crucial issue for 2024 will be how AI deployers clarify their relationship with developers: where liability and responsibility will lie.

This next slide seeks to map out the different risks and challenges that have emerged over the last year under eight headings. Some of these challenges are significant, such as how the right to erasure interacts with the tokenised approach of foundation models. This doesn’t mean we should give up on these rights, but it will require data protection authorities (DPAs) to think deeply about what effective application means in practice rather than in theory. DPAs should demand more from industry on privacy engineering and solutions, but a pragmatic, outcome-based interpretation of GDPR is needed to retain the relevance of the rights and the importance of data governance.

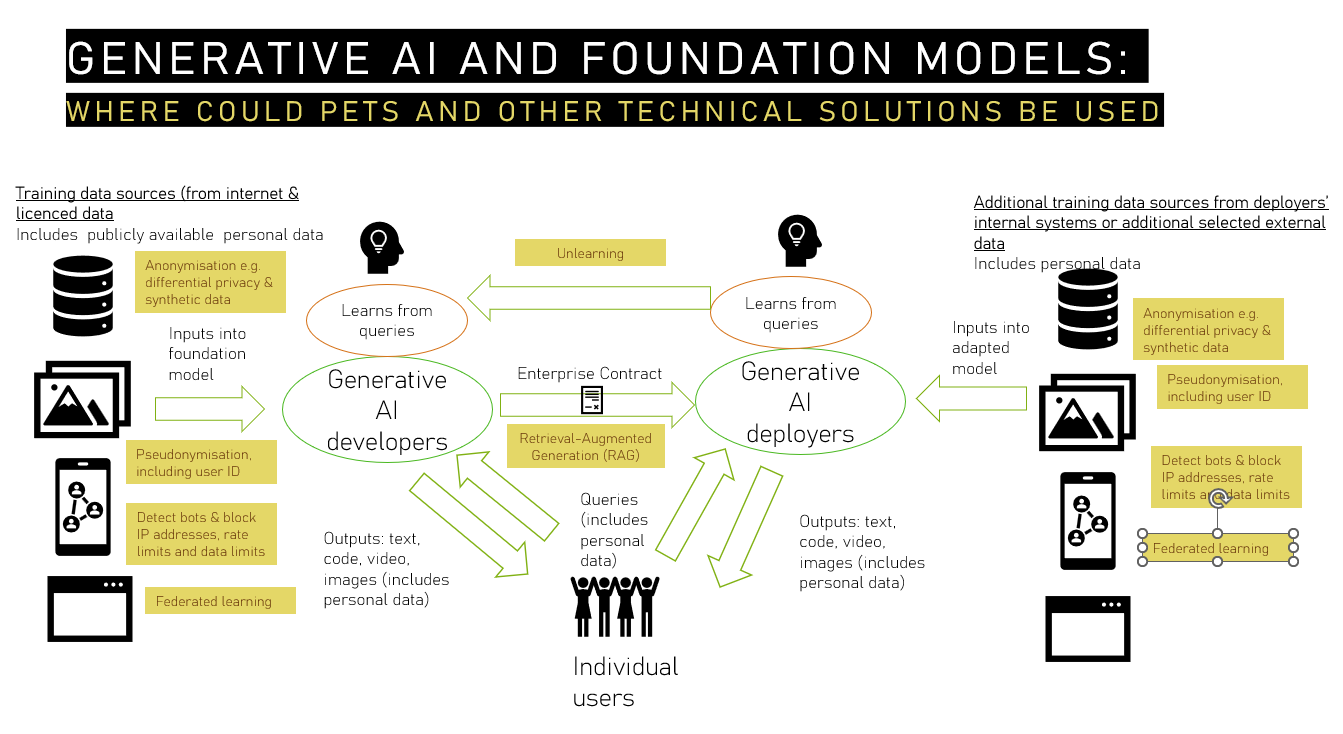

The solutions generative AI data sources, developers and deployers have implemented in 2023

The range of data protection risks is complex and may vary depending on whether the controller is a data source, developer or deployer (or undertaking multiple roles). As we’ve seen throughout the year, there has been frequent reference to the importance of using data protection impact assessments to identify and mitigate the risks. There is still some uncertainty about which mitigations data protection authorities will recognise as sufficient and where the responsibilities of developers and deployers begin and end.

The release of the UK ICO guidance on privacy enhancing technologies (PETs) has also provided a much needed overview of which techniques may be used in relation to AI (not just generative AI) and the situations in which they could be used.

Looking ahead to 2024, advances in “unlearning” may be a critical area of examination and a test of how pragmatic and patient DPAs will be about the implementation of solutions.

Here’s a summary of some of the solutions we’ve seen to the challenges:

Use of techniques to prevent data scraping, such as rate limits and IP address blocking.

Rejection or removal of personal data from training data before the model is developed.

Foundation models rejecting queries for private or sensitive information about people.

Use of anonymisation or pseudonymisation techniques to reduce the risks of identification from training data.

Use of federated learning to enable data minimisation and reduce identification risks.

Developers allowing website operators to specifically disallow crawlers.

Developers not training on data from enterprise deployments of their foundation model, and allowing the deploying organisation to control data retention.

Use of retrieval-augmented generation (RAG): “It ensures that the model has access to the most current, reliable facts, and that users have access to the model’s sources, ensuring that its claims can be checked for accuracy and ultimately trusted” (IBM). Also see the video below.

Opt-outs allowing individual users to stop their chat history being used for training.

Use of unlearning to remove or modify predictions. This is still an emerging science (see this article by Shaik et al. and this one by Hine et al.) but holds important promise for 2024. It is part of the wider concept of ‘model disgorgement’.

Improved privacy notices and information about training data and classification processes, including safety cards.

New online forms and procedures for data protection rights, including subject access and objection.

Revised enterprise contracts and data protection agreements between developers and deployers.

Guidance and tools from developers to support safe deployments.
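
The crawler opt-out mentioned above typically works through a website’s robots.txt file. As a minimal sketch, assuming a site that blocks the AI-training crawler tokens GPTBot (OpenAI) and Google-Extended (Google) while leaving other crawlers unrestricted, Python’s standard-library robots.txt parser shows how a crawler that honours the file would decide whether it may fetch a page. The example URL and the ExampleSearchBot agent are hypothetical, and honouring robots.txt is voluntary on the crawler’s side.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a site that opts out of AI-training crawlers
# while leaving all other crawlers unrestricted.
ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: Google-Extended
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if a crawler honouring robots.txt may fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/articles/some-post"  # hypothetical URL
    for agent in ("GPTBot", "Google-Extended", "ExampleSearchBot"):
        print(agent, is_allowed(ROBOTS_TXT, agent, page))
```

Run as a script, this reports GPTBot and Google-Extended as blocked and ExampleSearchBot as allowed, because only the catch-all `*` group applies to the latter.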

If RAG is new to you, this IBM video is a great starting point.
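
To make the idea concrete, here is a deliberately simplified sketch of the retrieval step in RAG: passages are pulled from a document store and placed, with their source identifiers, into the prompt so that the model’s answer can be traced back and checked. It assumes a tiny in-memory store with hypothetical passages and source IDs, and uses naive keyword overlap as a stand-in for the embedding-based search that production RAG systems typically use. The call to an actual language model is left out.

```python
import re
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # hypothetical source ID (e.g. a document name or URL)
    text: str


def tokenize(text: str) -> set[str]:
    """Lower-case the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, store: list[Passage], k: int = 2) -> list[Passage]:
    """Return up to k passages sharing the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(p.text)), p) for p in store]
    # Drop passages with no overlap, then rank by overlap (ties keep store order).
    scored = [(score, p) for score, p in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(query: str, passages: list[Passage]) -> str:
    """Ground the model in retrieved passages and ask it to cite their IDs."""
    context = "\n".join(f"[{p.source}] {p.text}" for p in passages)
    return (
        "Answer using only the sources below and cite them by ID.\n"
        f"{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    store = [
        Passage("doc-a", "Support tickets are retained for 90 days."),
        Passage("doc-b", "Customer emails are deleted after account closure."),
        Passage("doc-c", "The support team works from the London office."),
    ]
    question = "How long are support tickets retained?"
    print(build_prompt(question, retrieve(question, store, k=1)))
```

Because each retrieved passage carries its source ID into the prompt, a user can check the model’s answer against the cited source, which is the accountability benefit the IBM quote highlights.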

Actions underway by Data Protection Authorities on generative AI

Globally, data protection authorities (DPAs) have already taken action to set out their key expectations for the implementation of generative AI. Firstly, the G7 data protection authorities issued a joint statement on generative AI in June 2023. Their key areas of concern were:

Legal authority for the processing of personal information

Risks to children

Security safeguards

Mitigation and monitoring measures of generated output

Transparency measures to promote openness and explainability

Production of technical documentation across the development lifecycle to assess compliance

Technical and organizational measures to ensure individuals can exercise their rights

Accountability measures to ensure appropriate levels of responsibility among actors in the AI supply chain

Limiting collection of personal data to only that which is necessary to fulfil the specified task

In October 2023, the Global Privacy Assembly (the international network of DPAs) adopted a resolution on generative AI that built on the G7 statement. The resolution included a call for co-ordinated enforcement on generative AI. We have already seen considerable enforcement co-ordination by GPA members in the case of the facial recognition service offered by Clearview.

It seems likely that DPAs will target a range of controllers: data sources, developers and deployers. So far, however, much of the attention has focused on OpenAI and ChatGPT.

This great blog by Gabriela Zanfir-Fortuna at the Future of Privacy Forum provides a comprehensive overview of the enforcement action taken so far in 2023.

The Irish Data Protection Commission also engaged with Google ahead of the launch of the Bard generative AI tool, and the launch was pushed back to accommodate its feedback. Ex-ante supervision and engagement will also be an important approach for DPAs, though it should be recognised that such engagement is often voluntary and the companies concerned are not seeking formal approval (or statutory consultation on their DPIA under GDPR).

In August 2023, a group of 12 DPAs set out their expectations on data scraping and the measures they expect controllers to take to comply with data protection and privacy laws globally. In November, the Italian DPA, the Garante, announced a consultation on data scraping for algorithm training purposes.

In 2024 we await the outputs of the European Data Protection Board’s Generative AI taskforce, set up in April 2023. It was established rapidly in response to the massive debate that exploded in the spring of this year. To ensure relevance and impact, it will be important that the taskforce produces guidance early in the new year, with a clear roadmap for consultation and the key questions to be resolved. Transparency, accuracy and hallucinations, and how the right to erasure applies to the tokenised approach of foundation models are all areas on which stakeholders expect guidance.

DPA guidance

The UK ICO has issued a short blog post on eight key questions related to generative AI. It is also worth noting that the ICO has already published extensive guidance on AI more generally.

In France, the CNIL has published “AI how-to sheets” that enable controllers to walk through AI compliance step by step.

More coordination to come into 2024

Overall, we can expect greater coordination between DPAs and other regulators (such as competition, consumer and online safety) in 2024, as GDPR forms part of the wider framework of digital regulation for AI.

The UK Digital Regulation Cooperation Forum is already leading the way on this topic, with other initiatives underway in Canada and Australia.

We of course await the outcome of the trilogue negotiations on the EU AI Act and the arrangements for EU coordination once the Act is passed. If the passage of the AI Act is delayed beyond the European Parliament elections in 2024, we can expect a greater spotlight to fall on EU data protection in 2024.

In 2024 the learning curve for all in the data protection industry will still be steep!

Re-use and copyright

If you would like to re-use the article content and/or the infographics, they are available under a Creative Commons BY-NC license. This allows re-users to distribute, remix, adapt, and build upon the material in any medium or format for noncommercial purposes only, and only so long as attribution is given to the creator.

Other articles I’ve published:

Children’s privacy and freedom of expression

I teamed up with former UK Information Commissioner Elizabeth Denham to write an article about our experience of developing the Age Appropriate Design Code (AADC) here in the UK and how we addressed questions related to freedom of expression. This intersection is a major issue in the US right now, after the court granted an injunction (sought by NetChoice) against the California Age Appropriate Design Code on First Amendment grounds. The case is now heading to appeal next year. It’s vital we find a way through these tensions so that safety by design frameworks such as the AADC can have statutory force and strengthen the incentives for privacy engineering and data protection by design that take account of children’s best interests from the outset. Read the article over on the IAPP website.

Coming soon in future newsletters

I also have some content already under development on two topics: age assurance and the digital risks for elections in 2024. These should be out as newsletters #4 and #5 early in the new year.

In the meantime, have a restful winter holiday and best wishes for 2024.

Steve